
This is Fabrice, a Human-Computer Interaction (HCI) researcher at PFN.

While automated systems based on deep neural networks are making rapid progress, it is important not to neglect the human factors involved in those processes, an aspect that is frequently referred to as “human in the loop”. In this respect, the HCI research community is well positioned not only to utilise advanced machine learning techniques as tools to create novel user-centred applications, but also to contribute approaches that facilitate the introduction, use and management of those complex tools. The information visualisation (InfoVis) community has started to shed some light on the “black box” of deep neural networks by proposing visualisations and user interfaces that help practitioners better understand what is happening inside it. PFN is closely following what is going on in HCI and InfoVis/Visual Analytics research and also aims to contribute in those areas.

PacificVis

The 11th IEEE Pacific Visualization Symposium (PacificVis 2018), which PFN sponsored and attended, was held in Kobe in April. Machine learning was well covered with several contributions, including the first keynote by Prof. Shixia Liu of Tsinghua University on “Explainable Machine Learning” and the best paper “GANViz: A Visual Analytics Approach to Understand the Adversarial Game”, which followed in the footsteps of the best paper of IEEE VIS’17 about a visual analytics system for TensorFlow. Those contributions are closely related to Explainable Artificial Intelligence (XAI), an effort to produce machine learning techniques based on explainable models and interfaces that can help users understand and interpret why and how automated systems come to particular decisions or results. Whether those algorithms and tools will be sufficient to fulfil the right to explanation of the EU’s new General Data Protection Regulation (GDPR) remains to be seen.

CHI

The ACM Conference on Human Factors in Computing Systems (CHI) is the premier international conference on Human-Computer Interaction. This year it took place in Montreal, Canada, with more than 3,300 participants and an official welcome letter from Prime Minister Justin Trudeau.

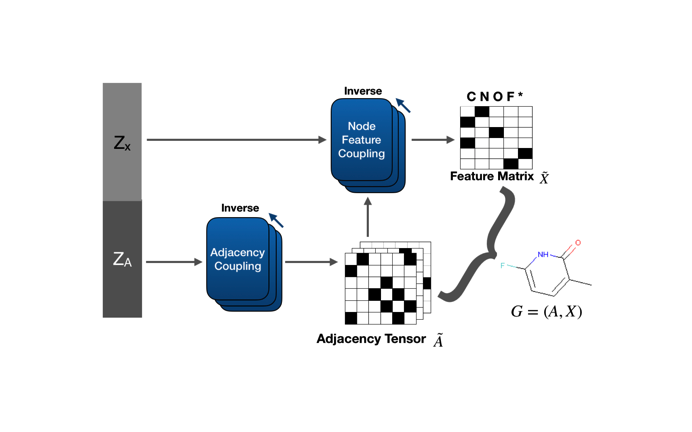

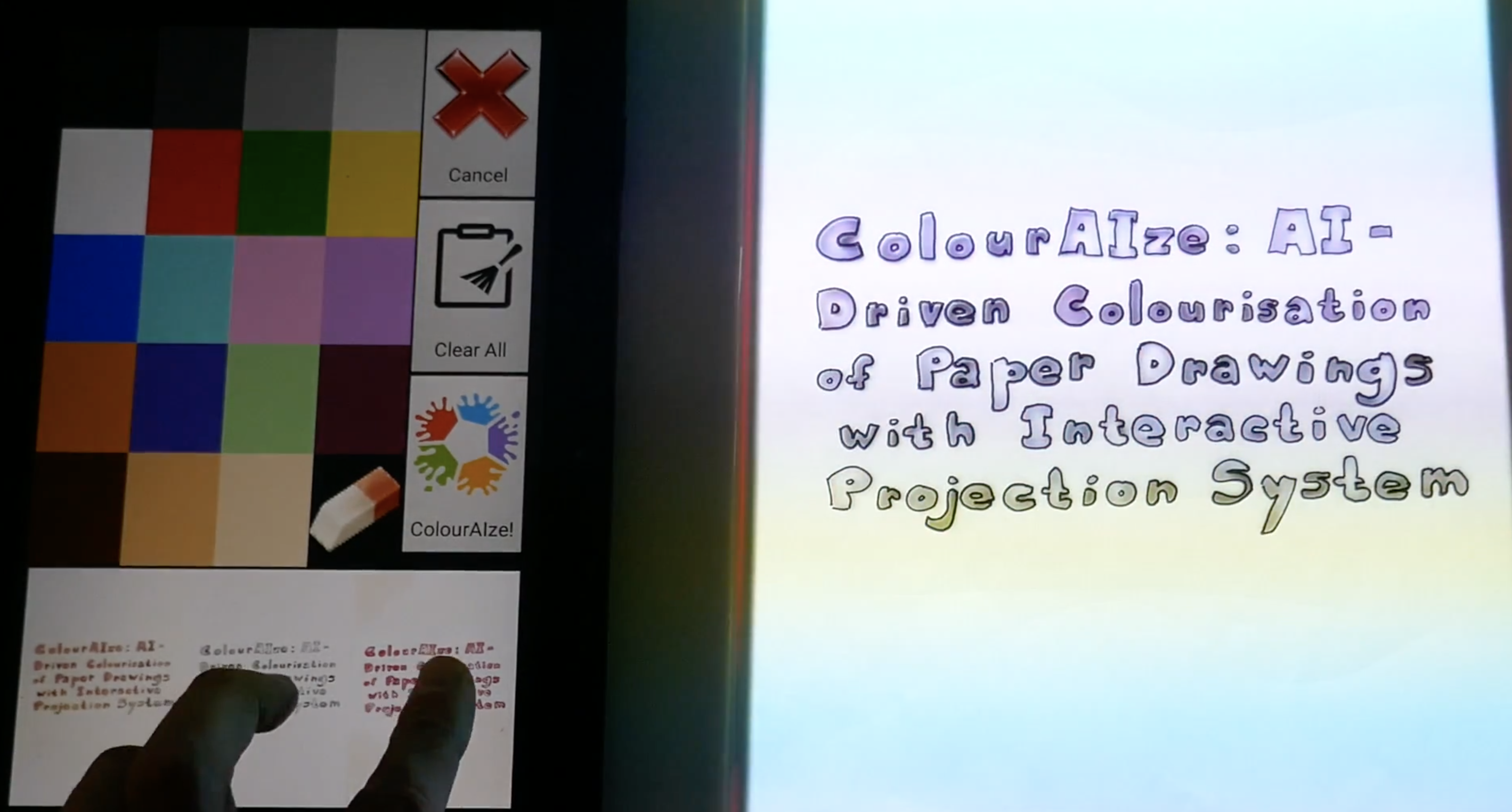

A common use of machine learning in HCI is to detect or recognise patterns from complex sensor data in order to realise novel interaction techniques, e.g. palm contact detection from raw touch data or handwriting recognition using pen tip motion and writing sound. With the wide availability of deep learning frameworks, HCI researchers have integrated those new tools into their arsenal to increase the recognition performance of previous techniques or to create entirely new ones, which would have been ineffective or difficult to realise using older methods. Good examples of the latter are systems enabled by generative nets. For instance, DeepWriting is a deep generative model that can generate handwriting from typeset text and even beautify or mimic handwriting styles. ExtVision, which is inspired by IllumiRoom, automatically generates peripheral images using conditional adversarial nets instead of using actual content.

Aksan, E., Pece, F. and Hilliges, O. DeepWriting: Making Digital Ink Editable via Deep Generative Modeling. Code made available on GitHub.

Two other categories of machine learning applications that we increasingly see in HCI are interaction prediction and emotional state estimation. In the former category, Li, Bengio (Samy) and Bailly investigated how DNNs can predict human performance in interaction tasks using the example of vertical menu selection. For emotion and state recognition, in addition to an introductory course by Lex Fridman from MIT on “deep learning for understanding the human”, two papers about estimating cognitive load from eye pupil movements in videos and EEG signals were presented. With the relentless proliferation of sensors in mobile and wearable devices, we are bound to see more and more “smart” systems that seek to better understand people and anticipate their moves, for good or bad.

CHI also includes many vis contributions and this year was no exception. Of particular relevance for visual exploration of big data and DNN understanding was the work by Cavallo and Demiralp, who created a visual interaction framework to improve exploratory analysis of high-dimensional data using tools to navigate in a reduced dimension graph and observe how modifying the reduced data affects the initial dataset. The examples using autoencoders on MNIST and QuickDraw, where the user draws on input samples to see how results change, are particularly interesting.

Cavallo M, Demiralp Ç. A Visual Interaction Framework for Dimensionality Reduction Based Data Exploration.
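To make the idea of "modifying the reduced data and observing the effect on the initial dataset" a little more concrete, here is a minimal sketch of a forward and backward projection. It is not the authors' implementation: it swaps in a one-component PCA (computed by power iteration) for the autoencoders mentioned above, and the names `fit_axis`, `forward`, `backward` and the toy `samples` are all made up for illustration.

```python
import math

def fit_axis(rows, iterations=100):
    """Return (mean, unit axis) of the leading principal direction via power iteration."""
    n = len(rows)
    mean = [sum(col) / n for col in zip(*rows)]
    centred = [[x - m for x, m in zip(row, mean)] for row in rows]
    dim = len(mean)
    # Scatter matrix; only its leading eigenvector matters, not its scale.
    scatter = [[sum(r[i] * r[j] for r in centred) for j in range(dim)]
               for i in range(dim)]
    axis = [1.0] * dim  # assumption: not orthogonal to the leading direction
    for _ in range(iterations):
        nxt = [sum(scatter[i][j] * axis[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(v * v for v in nxt))
        axis = [v / norm for v in nxt]
    return mean, axis

def forward(point, mean, axis):
    """Project an original-space point to its 1-D reduced coordinate."""
    return sum((p - m) * a for p, m, a in zip(point, mean, axis))

def backward(coord, mean, axis):
    """Map a reduced coordinate back to a point in the original space."""
    return [m + coord * a for m, a in zip(mean, axis)]

samples = [[0.0, 0.1], [1.0, 0.9], [2.0, 2.1], [3.0, 2.9]]  # hypothetical toy data
mean, axis = fit_axis(samples)

z = forward(samples[0], mean, axis)      # where the sample sits in reduced space
before = backward(z, mean, axis)         # its reconstruction in the original space
after = backward(z + 1.0, mean, axis)    # "drag" the point by one unit in reduced space
shift = [b - a for a, b in zip(before, after)]  # resulting change in the original space
```

With a linear projection like this one, dragging a point in the reduced space moves its reconstruction along a straight line in the original space. A nonlinear model such as an autoencoder would trace a curve instead, which is where an interactive tool becomes useful.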

I should also mention DuetDraw, a prototype that allows users and AI to sketch collaboratively and which uses PaintsChainer!

Multiray: Multi-Finger Raycasting for Large Displays

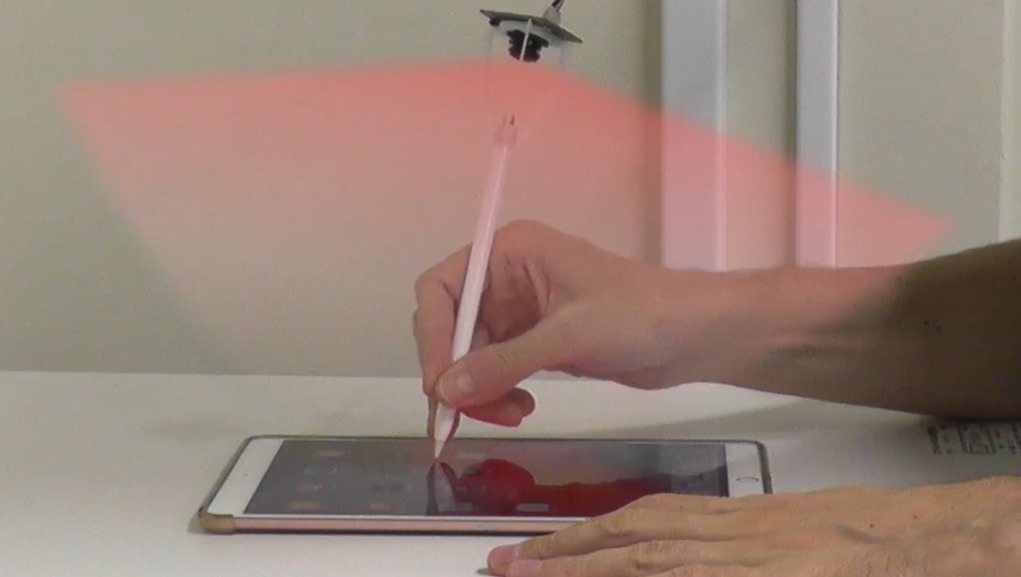

My contribution to CHI this year was not related to machine learning. It involved interacting with remote displays using multiple rays emanating from the fingers. This work with Dan Vogel, which received an honourable mention, was done while I was at the University of Waterloo. The idea is to extend single-finger raycasting to multiple rays using two or more fingers in order to increase the interaction vocabulary, in particular through a number of geometric shapes that users form with the projected points on the screen.


Matulic F, Vogel D. Multiray: Multi-Finger Raycasting for Large Displays
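For readers unfamiliar with raycasting, the geometric core is a ray-plane intersection repeated once per finger. The sketch below is only an illustration under simple assumptions, not the code used in the paper: the display is modelled as the plane z = 0, finger positions and pointing directions are assumed to come from some tracking system, and `ray_plane_hit` and the sample coordinates are hypothetical.

```python
import math

def ray_plane_hit(origin, direction,
                  plane_point=(0.0, 0.0, 0.0), plane_normal=(0.0, 0.0, 1.0)):
    """Return the point where a finger ray meets the display plane, or None."""
    denom = sum(d * n for d, n in zip(direction, plane_normal))
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the display
    t = sum((p - o) * n
            for p, o, n in zip(plane_point, origin, plane_normal)) / denom
    if t < 0:
        return None  # display is behind the finger
    return tuple(o + t * d for o, d in zip(origin, direction))

# Two fingers held 2 units in front of the display (made-up coordinates).
index_hit = ray_plane_hit((0.0, 0.0, 2.0), (0.0, 0.0, -1.0))
middle_hit = ray_plane_hit((0.1, 0.0, 2.0), (0.1, 0.0, -1.0))

# With two rays, the distance between the projected points is one simple
# property of the "shape" they form on the screen.
spread = math.dist(index_hit, middle_hit)
```

With three or more rays, the same projected points could be fed into any shape test (for example, the area or orientation of the polygon they form) to distinguish different hand postures.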

Final thoughts

So far, it is mostly the vis community that has tackled the challenge of opening up the black box of DNNs. Being focused on visualisation, however, many of the proposed tools have only limited interactive capabilities, especially when it comes to tweaking input and output data to understand how this affects the neurons of the inner layers. This is where HCI researchers need to step up and create tools that support dynamic analysis of DNNs, with possibilities to interactively make adjustments to the models. HCI approaches are also needed to improve the other processes of machine learning pipelines in which humans are involved, such as data labelling, model selection and integration, and data augmentation and generation. I think we can expect to see an increasing amount of work addressing those aspects at future CHIs and other HCI venues.